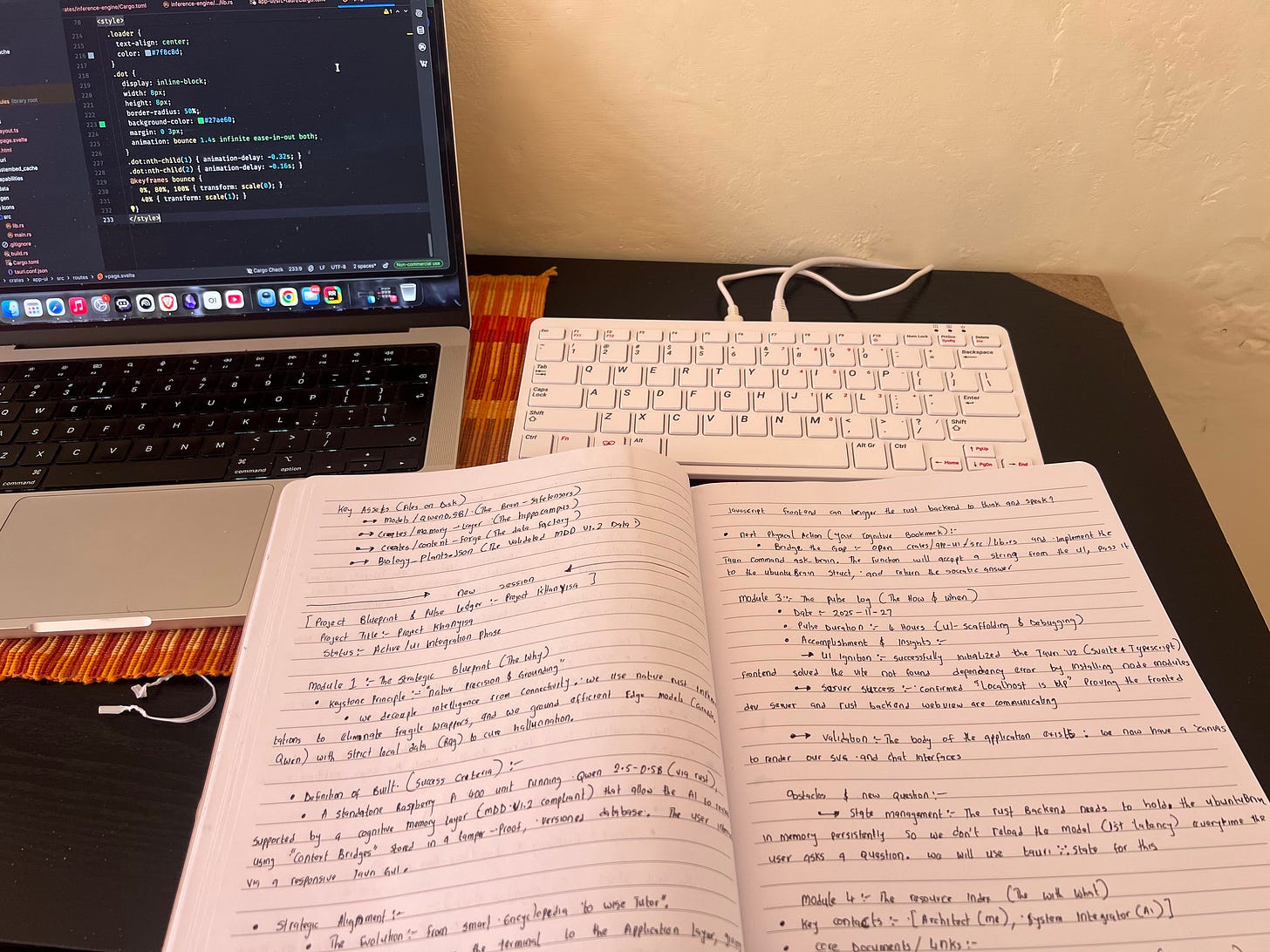

FIELD REPORT 002: Engine Ignition & The "Arrow" Version Hell

How we abandoned the GGUF standard to build a native Rust engine that thinks in metaphors.

The Obstacle: Dependency Hell

Real engineering is rarely a straight line. Since our last report, the lab hit a significant wall.

Our original architectural plan relied on the GGUF standard (using models like Phi-2 or Granite) to handle quantization. It looked good on paper. In practice, it was a trap. We ran into persistent metadata conflicts and what I call “Arrow Version Hell”: conflicting dependencies on arrow-rs v50 and v53 that broke our database connectors.

We repeatedly hit error codes E0255 (a duplicate name definition) and os error 20.

The Pivot: We made the strategic decision to abandon the fragile GGUF path and switched instead to Qwen 2.5-0.5B, loaded natively from Safetensors.

The result? The engine now fits comfortably in ~1GB of RAM, respects the ChatML format, and runs natively in Rust without the bloat. We successfully cleared the dependency errors and ignited the engine.
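The ~1GB figure follows from simple arithmetic, and "respecting ChatML" comes down to a fixed prompt layout. The sketch below is illustrative only: it assumes roughly 500 million parameters stored at 2 bytes each (f16/bf16) and counts the weights alone, not the KV cache or runtime overhead. `weight_bytes` and `chatml_prompt` are hypothetical helper names chosen for this illustration; the `<|im_start|>` / `<|im_end|>` markers are the standard ChatML delimiters that Qwen 2.5 is trained on.

```rust
/// Approximate memory needed just to hold the model weights, in bytes.
/// Ignores the KV cache, activations, and runtime overhead.
fn weight_bytes(num_params: u64, bytes_per_param: u64) -> u64 {
    num_params * bytes_per_param
}

/// Render a single-turn conversation in ChatML.
/// The trailing, still-open assistant turn cues the model to write its reply.
fn chatml_prompt(system: &str, user: &str) -> String {
    // A trailing backslash in a Rust string literal skips the newline and the
    // next line's leading whitespace, so each turn ends with exactly one "\n".
    format!(
        "<|im_start|>system\n{system}<|im_end|>\n\
         <|im_start|>user\n{user}<|im_end|>\n\
         <|im_start|>assistant\n"
    )
}

fn main() {
    // Assumption: ~0.5B parameters stored as 16-bit floats (2 bytes each).
    let bytes = weight_bytes(500_000_000, 2);
    println!("weights: ~{:.1} GB", bytes as f64 / 1e9);

    print!(
        "{}",
        chatml_prompt("You are a patient science tutor.", "How does rain form?")
    );
}
```

At 16-bit precision the weights alone come to roughly 1 GB, which lines up with the footprint we observed.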

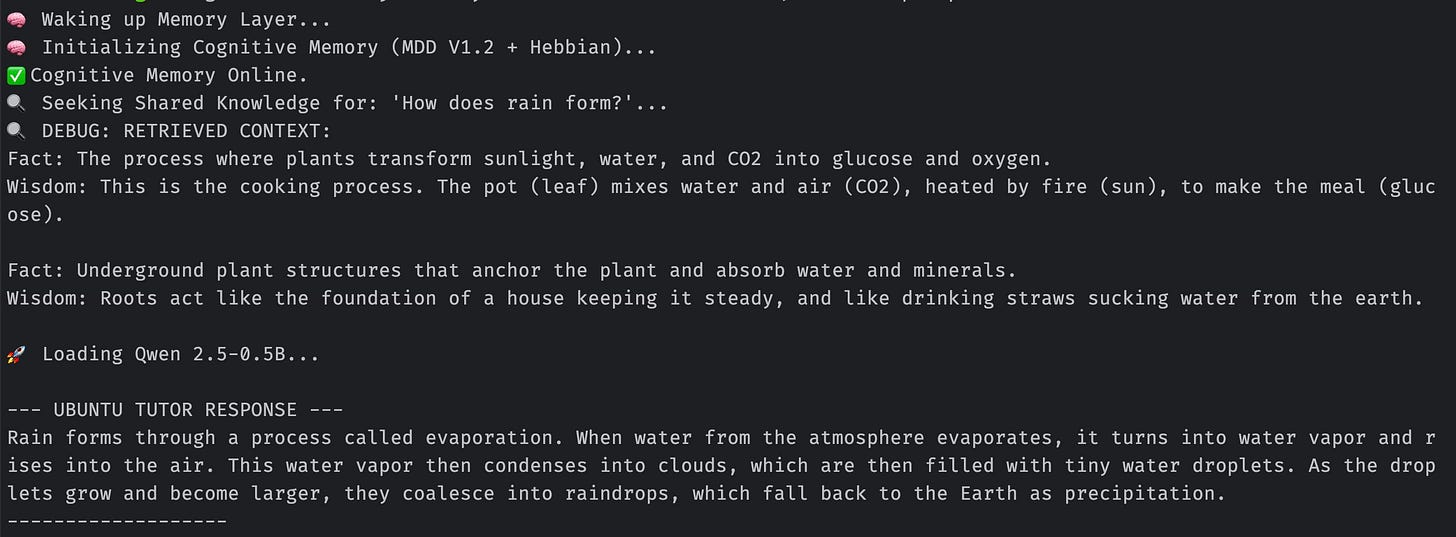

The Innovation: The “Ubuntu” Memory Layer

We aren’t just building a chatbot; we are building a Cognitive Architecture rooted in Epistemic Hygiene.

Most RAG (Retrieval-Augmented Generation) systems simply dump raw text into a database. We built a custom kernel called MDD V1.2 (Mechanistic Data Distillery), which splits information into two distinct streams before it ever reaches the AI:

Fact: The raw scientific data (e.g., Photosynthesis).

Wisdom: The cultural metaphor that grounds the concept (e.g., The “Cooking Pot”).
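MDD's internals are not covered in this report, so the following is only a minimal sketch of the two-stream idea. It assumes a hypothetical input format in which source notes tag each line with `FACT:` or `WISDOM:`. `DistilledEntry` and `distill` are illustrative names; the point is that facts and metaphors sit in separate fields from the moment they are ingested, so later stages can retrieve and weight them independently.

```rust
/// One concept after distillation. The fact stream and the wisdom stream
/// are stored in separate fields rather than as one blob of raw text.
#[derive(Debug, Clone, PartialEq)]
struct DistilledEntry {
    concept: String,
    facts: Vec<String>,
    wisdom: Vec<String>,
}

/// Hypothetical distiller: routes "FACT:" lines to `facts` and "WISDOM:"
/// lines to `wisdom`. Untagged lines are dropped rather than guessed at.
fn distill(concept: &str, note: &str) -> DistilledEntry {
    let mut entry = DistilledEntry {
        concept: concept.to_string(),
        facts: Vec::new(),
        wisdom: Vec::new(),
    };
    for line in note.lines().map(str::trim) {
        if let Some(rest) = line.strip_prefix("FACT:") {
            entry.facts.push(rest.trim().to_string());
        } else if let Some(rest) = line.strip_prefix("WISDOM:") {
            entry.wisdom.push(rest.trim().to_string());
        }
    }
    entry
}

fn main() {
    let note = "FACT: Leaves use sunlight to turn water and carbon dioxide into sugar and oxygen.\n\
                WISDOM: The leaf is a cooking pot and the sun is the fire beneath it.\n\
                Untagged text is ignored.";
    let entry = distill("photosynthesis", note);
    println!("{entry:#?}");
}
```

Dropping untagged lines is a deliberate hygiene choice in this sketch: ambiguous text stays out of both streams instead of being silently misfiled.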

As seen in the terminal log above, the system didn’t just find the definition of photosynthesis. It retrieved the “Ubuntu Context”: comparing the leaf to a pot, water/air to ingredients, and the sun to fire.
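At query time, both streams are handed to the model together. The sketch below assumes a simple keyword-overlap score as a stand-in for whatever similarity search the real engine uses (many RAG systems use vector embeddings instead), and it stores the wisdom stream as explicit analogy pairs drawn from the photosynthesis example above. `Entry`, `retrieve`, and `ubuntu_context` are hypothetical names.

```rust
use std::collections::HashSet;

/// A stored concept: the fact stream plus the wisdom stream as analogy pairs.
struct Entry {
    concept: &'static str,
    fact: &'static str,
    metaphor: &'static str,
    /// (element of the concept, element of the metaphor)
    analogy: Vec<(&'static str, &'static str)>,
}

/// Lowercased alphanumeric tokens, used for a crude keyword-overlap score.
fn tokens(text: &str) -> HashSet<String> {
    text.split(|c: char| !c.is_alphanumeric())
        .filter(|w| !w.is_empty())
        .map(|w| w.to_lowercase())
        .collect()
}

/// Number of query tokens that also appear in the entry's concept or fact.
fn score(query: &HashSet<String>, entry: &Entry) -> usize {
    let entry_tokens = tokens(&format!("{} {}", entry.concept, entry.fact));
    entry_tokens.intersection(query).count()
}

/// Return the best-scoring entry, or None if nothing overlaps the query at all.
fn retrieve<'a>(query: &str, store: &'a [Entry]) -> Option<&'a Entry> {
    let q = tokens(query);
    store
        .iter()
        .map(|e| (score(&q, e), e))
        .filter(|(s, _)| *s > 0)
        .max_by_key(|(s, _)| *s)
        .map(|(_, e)| e)
}

/// Assemble the grounded context: the fact first, then the metaphor mapping.
fn ubuntu_context(e: &Entry) -> String {
    let mut out = format!("FACT: {}\nWISDOM ({}):\n", e.fact, e.metaphor);
    for (part, image) in &e.analogy {
        out.push_str(&format!("- {part} -> {image}\n"));
    }
    out
}

fn photosynthesis_entry() -> Entry {
    Entry {
        concept: "photosynthesis",
        fact: "Leaves use sunlight to turn water and carbon dioxide into sugar and oxygen.",
        metaphor: "the cooking pot",
        analogy: vec![
            ("the leaf", "a pot"),
            ("water and air", "the ingredients"),
            ("the sun", "fire"),
        ],
    }
}

fn main() {
    let store = vec![photosynthesis_entry()];
    if let Some(entry) = retrieve("How does photosynthesis work?", &store) {
        print!("{}", ubuntu_context(entry));
    }
}
```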

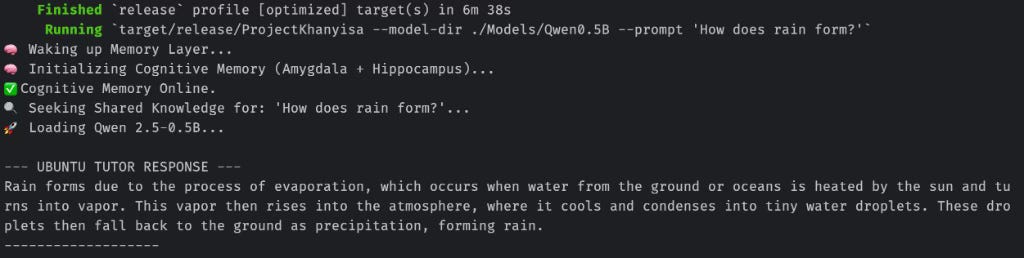

Proof of Life: Engine Ignition

With the new Memory Layer active, we ran the “Proof of Life” test. We asked the system a completely new question: “How does rain form?”

The system successfully:

Woke up the Memory Layer.

Initialized the Cognitive Memory (Amygdala + Hippocampus).

Loaded the Qwen 2.5 Model.

Delivered a grounded, coherent answer in under 3 seconds—100% offline.
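The ignition sequence is strictly ordered, and the 3-second figure works as an end-to-end latency budget. The sketch below shows one way to express that. Each stage is a hypothetical `Stage` whose function is a stub standing in for real work; it makes no assumptions about what the Amygdala and Hippocampus components do internally. `run_pipeline` stops at the first failure and then checks total wall-clock time against the budget.

```rust
use std::time::{Duration, Instant};

/// One named step of the ignition sequence. `run` is a stub here;
/// in a real engine it would load data, weights, and so on (hypothetical).
struct Stage {
    name: &'static str,
    run: fn() -> Result<(), String>,
}

/// Run stages in order, stop at the first failure, and compare the total
/// elapsed wall-clock time against a latency budget.
fn run_pipeline(stages: &[Stage], budget: Duration) -> Result<Duration, String> {
    let start = Instant::now();
    for stage in stages {
        (stage.run)().map_err(|e| format!("{} failed: {}", stage.name, e))?;
        println!("[ok] {} ({:?} elapsed)", stage.name, start.elapsed());
    }
    let total = start.elapsed();
    if total > budget {
        return Err(format!("over budget: {total:?} > {budget:?}"));
    }
    Ok(total)
}

fn main() {
    let stages = vec![
        Stage { name: "wake memory layer", run: || Ok(()) },
        Stage { name: "init cognitive memory (amygdala + hippocampus)", run: || Ok(()) },
        Stage { name: "load Qwen 2.5 model", run: || Ok(()) },
        Stage { name: "generate answer", run: || Ok(()) },
    ];
    match run_pipeline(&stages, Duration::from_secs(3)) {
        Ok(total) => println!("answered in {total:?}"),
        Err(e) => eprintln!("{e}"),
    }
}
```

Failing fast keeps a broken stage (for example, missing weights) from being reported as a slow answer.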

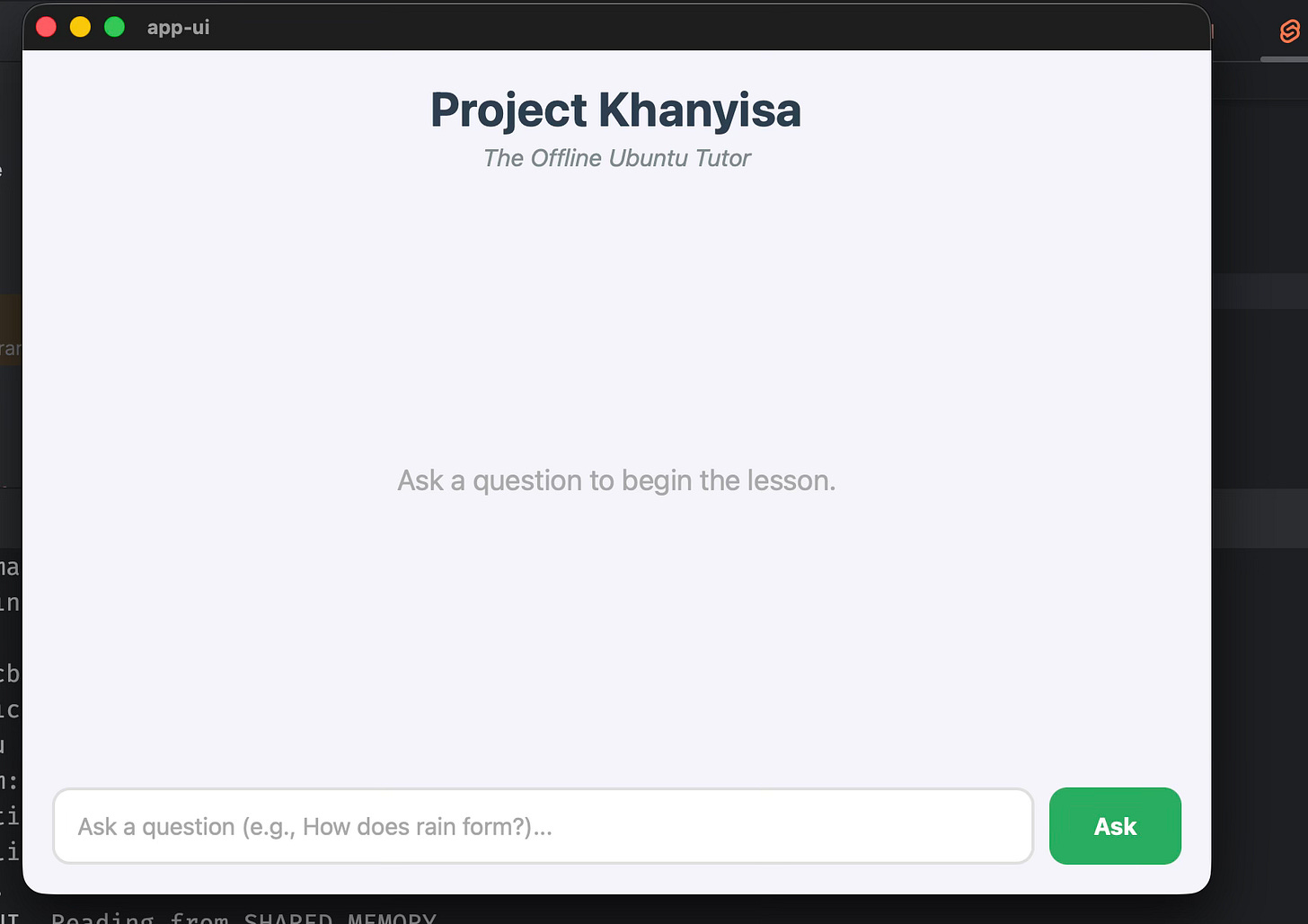

Next Steps: Giving the Ghost a Shell

The “Brain” (Rust Backend) is now alive. It can think, retrieve context, and reason. Now, we must give it a “Body.”

We are currently moving from the Terminal to the Application Layer. We have initialized a Tauri frontend to create a lightweight, responsive interface that lets students interact visually rather than through the command line.

Coming Soon: Data Archaeology

While we build the future in the lab, we must also understand the past. This weekend, we officially launch a new research track: Data Archaeology.

This will be an ongoing series of deep dives where we excavate the hidden metaphors and historical pressures that shape how we think about intelligence. Our first excavation begins with a forensic analysis of a metaphor we all take for granted: why we believe “Ideas are like Fish.”

The blueprint is public. The code is sovereign.